My experience selling shovels to gold miners, part 1: WhereTo

First Substack post, so let's see how this goes...

Intro

There’s a common saying that floats around tech circles: “during a gold rush, sell shovels”. It’s a really powerful phrase that intuitively makes sense to us. Why risk never finding gold when you can capitalize on human greed instead, a much surer thing than some ancient, sparsely found metal?

But is it too obvious? Well, in the midst of the pandemic, having lost my summer internship @ Microsoft, I had just the chance to find out.

WhereTo Journey

I ended up landing on my feet, getting a job as an Associate Product Manager (APM) intern @ a fast-growing ed-tech startup in India. At the same time, I’d resurrected a project I’d been working on since sophomore year: a recommendation engine for cool things to check out when you’re visiting a new city (given that I know you like X places in cities Y, can I recommend places for you to check out in city Z?).

At some point during my time at the company, I came across an internal effort to personalize learning for students on the platform. Spotting a chance to build something cool and maybe get paid for it, I reached out to the CSO (Chief Strategy Officer) and proposed that an external team, made up of my friends and me, take a stab at the problem.

She was initially interested in the idea, but proposed that we tackle a much larger problem for them instead: saving on content acquisition costs by automatically tagging each piece of acquired content with its place in the content hierarchy (subject / chapter / concept, etc.).

This was a far more menial problem than ‘personalization’. It definitely didn’t feel as cool, but that’s when I hit Key Learning #1: your product isn’t the thing, it’s the thing that gets you to the thing. In other words, your product could be the coolest thing in the world, but unless people see a tangible benefit to themselves, it’s hard to convince them to buy it. In our case, that meant that while personalization was cool, it didn’t have an explicit monetary outcome (besides maybe a tertiary effect on customer retention). Content classification, on the other hand, was something the company was actively spending money on (in this case, hiring dozens of subject-matter experts to classify content manually). The benefit of automating it was obvious.

After our initial conversation with them in June, we didn’t speak to them for 3 months apart from some intermittent progress updates. Finally, in early/mid August, we had a working model that cleared our internal thresholds for classification accuracy. That’s when all hell broke loose.

Every metric we’d agreed on was up for debate again. We were novices, and had naively assumed that once we’d reached alignment with the company in June (that what we were building had definite value), we could go ahead, do the work, and get paid for it. But instead of structuring the deal as a service contract, where we’d get paid for the time we put into building the model regardless of whether the company ended up using it, we’d structured it as an ‘acquisition’, where the company would pay us for the value of our product.

Key Learning #2: When working on AI/ML stuff, set a very low bar for expectations.

A big disagreement we had with the company was whether a model with 75% accuracy for content classification was still valuable. The underlying assumption was that human experts classified content with 100% accuracy, so if you still needed a human to review every model prediction, what was the model actually worth?

Switching tacks, we started pitching the model as an ‘assistant’ to the human classifiers instead of a complete replacement. To test its value, we built a classification platform to compare how accurate human experts were on their own vs. with the model providing recommendations. At the same time, we began reaching out to other companies, investors, and anyone else who would reply to our emails or take our calls, so that we’d have a backup in case the original company didn’t go for our model.

The thing that confused us was why companies weren’t building these automated classification tools internally. If a couple of college kids could build this, why weren’t these massively funded, hyper-growth startups able to solve the problem? The way we saw it, there was no way we could build a company around what we’d built: each company had its own content hierarchy (think of the way Khan Academy might classify a video vs. Udacity). And while there might be some initial value in our tool, at some stage in its development a company would just replace you with an internal one. Again, what we’d built wasn’t some insane technological innovation. If it took a few kids 3 months to build this tool, what could a team of engineers working on it full-time achieve?

Weren’t we following the axiom of ‘selling shovels to miners’? Ed-tech was on a tear, and instead of trying to compete with all these companies head-on, we were trying to build the plumbing that could power them all. At the time, I really struggled to understand a) why anybody would buy this from us rather than build it internally, and b) where our understanding of the axiom was failing.

We needn’t have worried, though, because as it turned out, even the human experts were terrible at content classification. Their overall accuracy ended up at ~65%, while the model’s was ~75% (funnily enough, experts shown the model’s recommendations actually slightly underperformed their unassisted counterparts, but the gap was only ~2%, so not a massive difference).

We had spent 3 months on this model. We had built an entire classification platform in a weekend, just to run the tests and demonstrate our value. We’d had countless discussions with the company without ever reaching the subject of money, since our value was always in question. But we’d finally, decisively shown how awesome a thing we’d built. My team and I were incredibly tired and ready to go back to college in the fall.

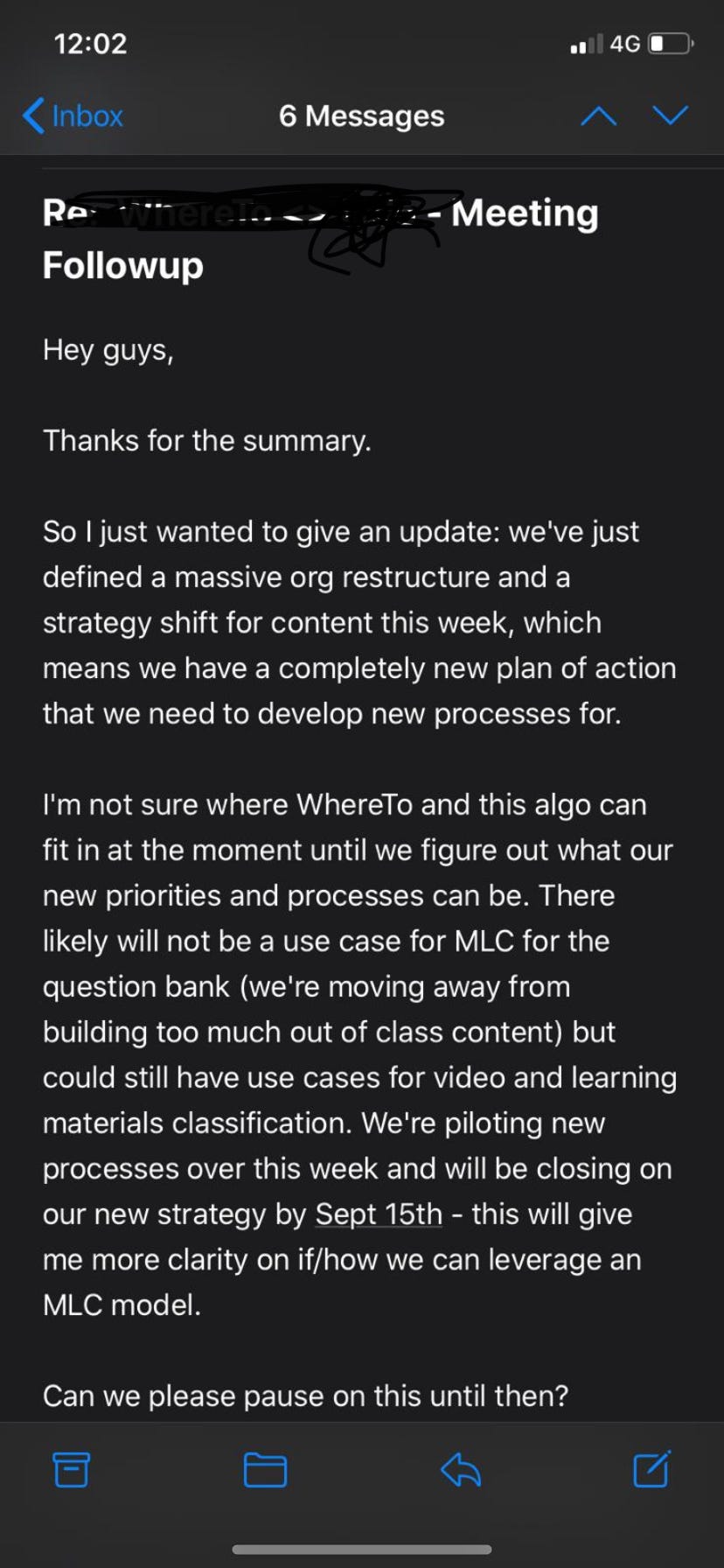

Except that’s never how things end. Two days after our final call, where we agreed on a price (and after I’d called up all my teammates to share the news), the company let us know that they’d changed their entire content strategy, almost overnight. They saw their burn on content acquisition as too high and were pivoting to a more content-light model. What’s the need for a model to automate content classification if there’s no content to classify?